After going through the cal procedure it is worthwhile checking the impedance of a sample resistor or two (preferably a separate 1% resistor not used during cal, and perhaps of value a decade below and above the reference resistor value). If the impedance plot is flat for impedance and phase and within 1-2% of expected value then you should be fine for basic capacitors. How are you 'identifying' the test capacitor as '60+nF'? You can click the mouse on the impedance plot at a selected frequency and read the bottom left calculated value (best to do that at the frequency used by the LCR meter if you want to compare those two measurement techniques), as well as use the component model function (which requires the part to exhibit certain impedance characteristics over a given frequency span).


maximum range of impedance measurement

- Thread starter JLM1948

- Start date

Been away from the computer for some time and been thinking about it all.

The method of analyzing the difference between the reference and the DUT, which is perfectly fine for loudspeakers, where the ratio of max to min impedance is about 20, is not adequate for measuring caps and inductors, where the ratio is 1000 (my choice). The method's accuracy depends on the difference being within the same order of magnitude as the stimulus signal. Take, say, a 1H inductor, whose impedance varies from about 120 ohms to 120k: the series resistor should be high enough to produce a significant drop when the reactance of the inductor is at its maximum. So a resistor of at least 12 kohms should be used.
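As a quick check of the figures above, here is a minimal sketch of the reactance of an ideal 1H inductor. The 20 Hz and 20 kHz band edges are an assumption (the post does not state the frequency span); they are the values that give roughly 120 ohms and 120k.

```python
import math

def inductor_reactance(inductance_h, freq_hz):
    """Magnitude of an ideal inductor's impedance, |Z| = 2*pi*f*L, in ohms."""
    return 2 * math.pi * freq_hz * inductance_h

L_H = 1.0  # the 1H inductor from the post
for f in (20.0, 20_000.0):  # assumed band edges, not stated in the post
    print(f"{f:>8.0f} Hz: {inductor_reactance(L_H, f):>10,.0f} ohm")
```

A real inductor also has winding resistance, core loss and self-capacitance, so this only gives the order of magnitude.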

At the other end of the spectrum, the signal across the inductor would be about 40dB below the stimulus, which requires the S/N ratio of the ADC to be at least 65dB for decent accuracy, and that is not always guaranteed.
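The 40dB figure can be reproduced with a simple divider calculation. This is only a sketch: it assumes an ideal, purely reactive DUT (so the resistor and DUT voltages add in quadrature), and the function name is made up for illustration.

```python
import math

def dut_level_db(r_series_ohm, x_dut_ohm):
    """Level across an ideal reactive DUT, in dB relative to the stimulus,
    when it is fed through a series resistor (simple voltage divider)."""
    ratio = x_dut_ohm / math.hypot(r_series_ohm, x_dut_ohm)
    return 20 * math.log10(ratio)

R_SERIES = 12_000.0  # series resistor suggested in the post
for x in (120.0, 120_000.0):  # reactance extremes quoted in the post
    print(f"X = {x:>9,.0f} ohm: {dut_level_db(R_SERIES, x):7.2f} dB")
```

With 12 kohms in series, the 120 ohm end comes out close to -40dB, while at the 120k end nearly the whole stimulus appears across the DUT.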

For this very reason, the series resistor should be much smaller, typically of the same order of magnitude as the lowest inductor impedance, about 120 ohms.

Clearly these are irreconcilable constraints. Using the geometric mean does not work because it's not the voltage ratio that is used, it's the difference.

Of course, it is possible to remedy that by splitting the measurement into two or three passes, each one with a different gain on the channel that measures the DUT.

Another possibility is switching the series resistor, which would mean recalibrating at each iteration. The adapter I built allows switching the series resistor; without recalibration, I get mediocre results (of course I need to multiply the displayed result by a factor of 10 or 100).

Another issue with this method is that the reactive DUT is not subjected to the full test voltage. Since inductors (and, to a lesser extent, capacitors) vary with applied voltage, that is a non-negligible drawback.

My conclusion is that a different method should be used.

Measuring current through a grounded reference resistor, fed by the DUT, would allow changing the gain to get the best resolution. The resistor would need to be at least 20x smaller than the lowest impedance for about 5% accuracy. For the aforementioned 1H inductor, that would be about 6 ohms, and the resulting voltage may vary between -26 and -86dBu, which would require a variable-gain preamp that is not terribly difficult to build. A chip like THAT 1510 can provide 60dB of gain with an EIN of <-130dBu when driven from a 6 ohm source impedance.
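The -26 and -86dBu figures follow from the same kind of divider arithmetic, this time looking at the voltage across the grounded sense resistor. A minimal sketch, assuming an ideal reactive DUT and a 0dBu stimulus (the stimulus level is an assumption; it is the value that matches the numbers above):

```python
import math

def sense_level_dbu(r_sense_ohm, x_dut_ohm, stimulus_dbu=0.0):
    """Level across a grounded sense resistor fed through an ideal
    reactive DUT, for a given stimulus level in dBu."""
    ratio = r_sense_ohm / math.hypot(r_sense_ohm, x_dut_ohm)
    return stimulus_dbu + 20 * math.log10(ratio)

R_SENSE = 6.0  # about 20x below the lowest DUT impedance (120 ohms)
for x in (120.0, 120_000.0):  # reactance extremes quoted in the post
    print(f"X = {x:>9,.0f} ohm: {sense_level_dbu(R_SENSE, x):7.2f} dBu")
```

The two results are about 60dB apart, which is the gain range the preamp would have to cover to keep the sense signal at a similar level across the band.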

Alternatively, using a larger stimulus (a typical SS stage can deliver about 10Vrms) puts less pressure on low-noise operation.

Scaling the resistor for different ranges should be very straightforward.

Actually, it's the method I have used till now, using my jurassic generator and analyzer. I'll just have to revamp it with my REW rig. The only problem is I don't have the IT knowledge to make it user friendly.

One possible drawback of this method is that it doesn't work with grounded loads. But I don't know why the "loudspeaker" method has been preferred, because loudspeakers are floating. Being able to measure grounded loads is better suited to measuring input and output impedances of non-floating equipment, though. But I don't think it's something people do regularly.

BTW, the "loudspeaker" method is not sanctified by e.g. Klippel; in their test equipment, impedance is measured via a current probe, which allows Z tests at nominal power and in noisy environments.


John Mulcahy

REW Author

- Joined

- Apr 3, 2017

- Posts

- 8,421

JLM1948 said:
The method of analyzing the difference between the reference and the DUT, which is perfectly fine for loudspeakers, where the ratio of max to min impedance is about 20, is not adequate for measuring caps and inductors, where the ratio is 1000 (my choice).

If you say so.

John Mulcahy


100 ohms. I don't have many inductors but got good results with those I do have; others have made more measurements.

JLM1948, I've been able to accurately measure the impedance of reference inductors down to 10uH, and larger cored inductors into the henry range, all with a 100 ohm sense resistor. I'd suggest you explore your measurement setup further, and step through from the simplest measurements using the simplest parts to confirm your results are consistent. Perhaps start with a range of test resistors spanning several orders of magnitude, over the entire frequency span of your soundcard and output voltage span, in the simplest direct loopback format, and then repeat with your own extra measurement jig circuitry added.

Believe me, I have done that umpteen times. I have tested rather large inductors so far, so maybe I need to use a larger series resistor than the 1k I have used so far.

I see John has measured a 10nF cap with a 100r resistor, so maybe I was too far off measuring a 60nF cap with a 1k series resistor.

My measurements appear to be polluted by noise.
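For comparing the two capacitor setups mentioned above, a rough sketch of the ideal capacitor reactance against each series resistor may help. The 20 Hz and 20 kHz test frequencies are an assumption, not something stated in the thread.

```python
import math

def capacitor_reactance(capacitance_f, freq_hz):
    """Magnitude of an ideal capacitor's impedance, 1/(2*pi*f*C), in ohms."""
    return 1.0 / (2 * math.pi * freq_hz * capacitance_f)

# (label, capacitance in farads, series resistor in ohms)
cases = (("10nF with 100 ohm", 10e-9, 100.0),
         ("60nF with 1k", 60e-9, 1000.0))
for label, c, r in cases:
    for f in (20.0, 20_000.0):  # assumed band edges
        x = capacitor_reactance(c, f)
        print(f"{label:>18}, {f:>6.0f} Hz: X = {x:>10,.0f} ohm, X/R = {x / r:8.2f}")
```

This only shows how far the reactance sits from the series resistor at each end of the band; it says nothing about noise pickup in the jig itself.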


JLM1948 said:
My measurements appear to be polluted by noise.

Even using the simplest, shortest signal paths from soundcard output to the two signal inputs?

Popular tags

20th century fox

4k blu-ray

4k uhd

4k ultrahd

action

adventure

animated

animation

bass

blu-ray

calibration

comedy

comics

denon

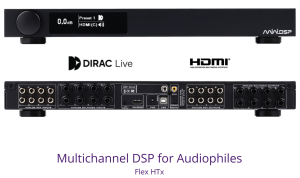

dirac

dirac live

disney

dolby atmos

drama

fantasy

hdmi 2.1

home theater

horror

kaleidescape

klipsch

lionsgate

marantz

movies

onkyo

paramount

pioneer

rew

romance

sci-fi

scream factory

shout factory

sony

stormaudio

subwoofer

svs

terror

thriller

uhd

ultrahd

ultrahd 4k

universal

value electronics

warner

warner brothers

well go usa